> ELASTIC TEACHER

// ARCHITECTURE V2: TECHNICAL REVIEW


I. CORE DESIGN RATIONALE

[DCL] Microservice Decoupling (LLMOps)

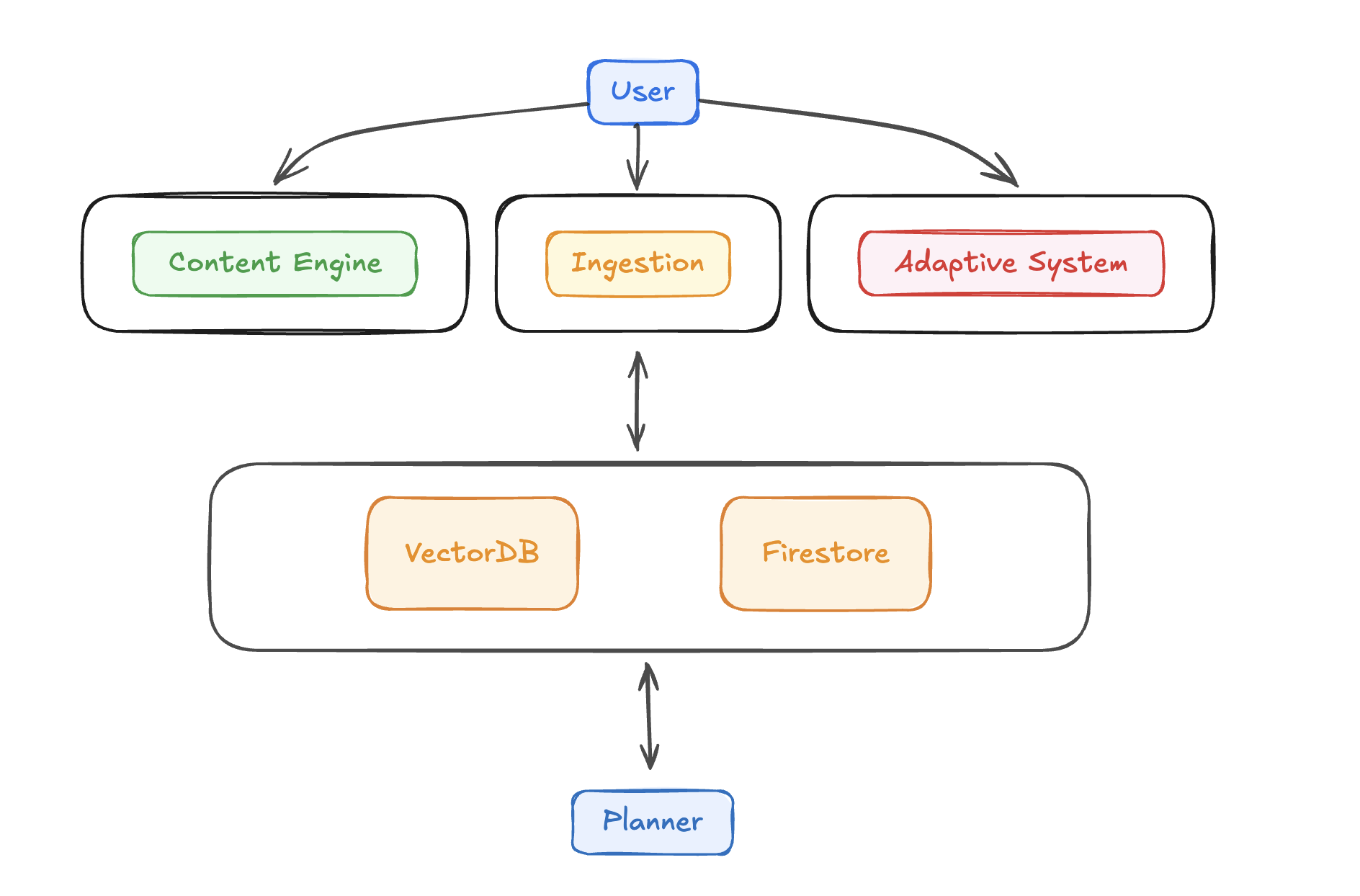

A primary risk in LLM pipelines is cascading failure across dependent services. By separating DINS, CPS, CGE, and ALS (detailed in Section III) into independent microservices, we give each one a clear API contract and its own failure domain, so a fault in one service does not propagate to the others.
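One common way to keep a failure inside its own domain is a circuit breaker around every cross-service call. The sketch below is a minimal, stdlib-only version; the class name, the failure count, the cooldown, and the fallback behavior are illustrative assumptions, not part of the architecture as stated.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Stops calling a failing downstream service for `cooldown_s` seconds
    after `max_failures` consecutive errors, serving a fallback instead."""

    def __init__(self, max_failures: int = 3, cooldown_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.cooldown_s = cooldown_s
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self.opened_at is not None:
            # Open: skip the downstream call entirely while cooling down.
            if self.clock() - self.opened_at < self.cooldown_s:
                return fallback()
            # Cooldown over ("half-open"): one more failure re-opens the breaker.
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            return fallback()
        self.failures = 0  # any success closes the breaker fully
        return result
```

For example, CGE could wrap its retrieval call to DINS in `breaker.call(...)` and fall back to an empty context or a cached quiz, so a DINS outage degrades CGE instead of taking it down.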

[STATE] Persistence Strategy (Firestore)

Adaptivity requires persistent state. We delegate all user performance metrics, mastery scores, and the dynamic curriculum structure to Firestore, so every service reads the same authoritative state and ALS can fire adaptive triggers when that state changes.
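To make "stateful memory" concrete, here is one possible shape for a learner's Firestore document. The path `users/{uid}` and the field names are hypothetical; the point is that mastery scores and the remaining curriculum live together in one document that any service can read.

```python
from dataclasses import asdict, dataclass, field
from typing import Dict, List


@dataclass
class LearnerState:
    """Hypothetical layout of one learner's document at users/{uid}."""
    uid: str
    mastery: Dict[str, float] = field(default_factory=dict)  # topic -> score in [0, 1]
    attempts: Dict[str, int] = field(default_factory=dict)   # topic -> graded submissions
    curriculum: List[str] = field(default_factory=list)      # ordered topic IDs still to study

    def path(self) -> str:
        return f"users/{self.uid}"

    def to_document(self) -> dict:
        # Firestore stores maps and arrays natively, so a plain dict suffices;
        # the uid is already encoded in the document path, so drop it from the body.
        doc = asdict(self)
        doc.pop("uid")
        return doc
```

With the `google-cloud-firestore` client this would be written as `client.document(state.path()).set(state.to_document())`.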

II. MICROSERVICE FLOW: ADAPTIVE LOOP (DIAGRAM)
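One pass of the adaptive loop can be sketched as plain function calls, assuming the order implied by the component mandates and the Section V roadmap: CPS plans, CGE generates, the learner's submission is graded, and ALS writes state and reorders the plan. Every function here is a hypothetical in-process stub standing in for a service, DINS is left out on the assumption that ingestion runs before the loop starts, and the 0.6 threshold is arbitrary.

```python
from typing import Callable, Dict, List, Tuple


def cps_plan(topics: List[str]) -> List[str]:
    # CPS stand-in: a deterministic ordering (alphabetical, purely illustrative).
    return sorted(topics)


def cge_quiz(topic: str) -> Dict[str, str]:
    # CGE stand-in: would return a grounded, schema-validated quiz item.
    return {"topic": topic, "prompt": f"Explain {topic} in your own words."}


def als_reschedule(plan: List[str], mastery: Dict[str, float],
                   threshold: float = 0.6) -> List[str]:
    # ALS stand-in: attempted topics still below threshold move to the front.
    weak = [t for t in plan if t in mastery and mastery[t] < threshold]
    rest = [t for t in plan if t not in weak]
    return weak + rest


def run_loop_once(topics: List[str],
                  grade: Callable[[Dict[str, str]], float]) -> Tuple[List[str], Dict[str, float]]:
    """plan -> generate -> grade submission -> write state -> reschedule."""
    mastery: Dict[str, float] = {}  # stands in for the learner's Firestore document
    plan = cps_plan(topics)
    for topic in plan:
        item = cge_quiz(topic)
        mastery[topic] = grade(item)  # ALS records the graded result
    return als_reschedule(plan, mastery), mastery
```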

III. COMPONENT DEEP DIVE (1/2)

1. DINS - Data Ingestion & Normalization

MANDATE: Robust, idempotent ingestion (re-ingesting the same source must not create duplicate chunks), optimized for retrieval quality.
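A standard way to get idempotency is to key each chunk by a hash of its source and normalized text, then upsert. The sketch below uses an in-memory dict in place of the vector DB and omits embedding; the function names and the whitespace-only normalization are assumptions.

```python
import hashlib
import re
from typing import Dict, List


def normalize(text: str) -> str:
    # Collapse runs of whitespace so re-extracted copies of a passage compare equal.
    return re.sub(r"\s+", " ", text).strip()


def chunk_id(source_id: str, text: str) -> str:
    # Deterministic key: same source + same normalized text -> same ID on every run.
    payload = f"{source_id}\n{normalize(text)}".encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def ingest(store: Dict[str, Dict[str, str]], source_id: str, chunks: List[str]) -> int:
    """Upsert chunks keyed by content hash and return how many were new.

    Re-running ingestion on the same source leaves the store unchanged,
    which is what makes retrying after a partial failure safe.
    """
    added = 0
    for text in chunks:
        key = chunk_id(source_id, text)
        if key not in store:
            added += 1
        store[key] = {"source": source_id, "text": normalize(text)}
    return added
```

In a real vector DB the hash would serve as the point or document ID, so a repeated upsert overwrites rather than duplicates.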

2. CPS - Curriculum Planning Service

MANDATE: Deterministic structure generation. Must not produce unstructured text.
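Whatever decoding settings are used on the model side, "must not produce unstructured text" is easiest to enforce with a strict parser that rejects anything off-shape. This is a minimal sketch; the `modules` / `id` / `title` / `topics` layout is a hypothetical syllabus format, not one defined in the plan.

```python
import json
from typing import Any, Dict, List


class SyllabusError(ValueError):
    """Raised when model output is not a well-formed syllabus."""


def parse_syllabus(raw: str) -> List[Dict[str, Any]]:
    """Accept only {"modules": [{"id", "title", "topics"}, ...]}.

    Prose, extra keys, or wrong types are rejected rather than repaired,
    so downstream services never see unstructured text.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise SyllabusError(f"not JSON: {exc}") from exc
    if not isinstance(data, dict) or set(data) != {"modules"}:
        raise SyllabusError("top level must be exactly {'modules': [...]}")
    modules = data["modules"]
    if not isinstance(modules, list) or not modules:
        raise SyllabusError("'modules' must be a non-empty list")
    seen_ids = set()
    for module in modules:
        if not isinstance(module, dict) or set(module) != {"id", "title", "topics"}:
            raise SyllabusError(f"module has wrong shape: {module!r}")
        if not isinstance(module["id"], str) or module["id"] in seen_ids:
            raise SyllabusError(f"missing or duplicate module id: {module['id']!r}")
        if not isinstance(module["title"], str):
            raise SyllabusError(f"title must be a string in {module['id']}")
        topics = module["topics"]
        if not isinstance(topics, list) or not all(isinstance(t, str) for t in topics):
            raise SyllabusError(f"topics must be a list of strings in {module['id']}")
        seen_ids.add(module["id"])
    return modules
```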

III. COMPONENT DEEP DIVE (2/2)

3. CGE - Content Generation Engine

MANDATE: Low-latency, grounded delivery of learning artifacts.
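A cheap grounding check that adds almost no latency: require every generated item to cite the retrieved chunks it relies on, and reject citations to chunks that were never in the prompt context. The `source_ids` field is a hypothetical convention, and this only verifies citations, not that the content actually follows from them.

```python
from typing import Any, Dict, List, Set


def ungrounded_items(quiz: List[Dict[str, Any]], retrieved_ids: Set[str]) -> List[int]:
    """Return indexes of quiz items that cite nothing, or cite a chunk
    that was not part of the retrieval context given to the model."""
    bad = []
    for i, item in enumerate(quiz):
        cites = item.get("source_ids", [])
        if not cites or not set(cites) <= retrieved_ids:
            bad.append(i)
    return bad
```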

4. ALS - Adaptive Learning System (LLMOps)

MANDATE: Real-time state manipulation and rescheduling (the engine of elasticity).
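As one possible realization of "real-time state manipulation", the sketch below folds each graded score into a per-topic mastery value with an exponential moving average and reports when a reschedule should fire. EMA, `alpha = 0.3`, and the 0.6 threshold are swapped-in assumptions; the plan does not specify a mastery model.

```python
from typing import Dict, Tuple


def update_mastery(mastery: Dict[str, float], topic: str, score: float,
                   alpha: float = 0.3, threshold: float = 0.6) -> Tuple[float, bool]:
    """EMA of graded scores in [0, 1]; returns (new_mastery, needs_reschedule)."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be in [0, 1]")
    old = mastery.get(topic)
    new = score if old is None else (1 - alpha) * old + alpha * score
    mastery[topic] = new
    # Fire only on a downward crossing, so a topic that stays weak
    # does not trigger a reschedule on every submission.
    crossed = new < threshold and (old is None or old >= threshold)
    return new, crossed
```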

IV. LLMOPS PROOF: STRUCTURED OUTPUT VALIDATION

LIVE DEMO: CGE Structured Quiz Generation
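The validation step behind this demo can be sketched with the standard library alone. The field names (`questions`, `prompt`, `options`, `answer_index`) are a hypothetical quiz format; with a formal JSON Schema document, the `jsonschema` package's `validate(instance, schema)` could replace these hand-written checks.

```python
import json
from typing import List


def quiz_errors(raw: str) -> List[str]:
    """Return the problems found in one generated quiz; empty means compliant."""
    try:
        quiz = json.loads(raw)
    except json.JSONDecodeError:
        return ["output is not valid JSON"]
    if not isinstance(quiz, dict) or not isinstance(quiz.get("questions"), list) \
            or not quiz["questions"]:
        return ["expected an object with a non-empty 'questions' list"]
    errors = []
    for i, q in enumerate(quiz["questions"]):
        if not isinstance(q, dict):
            errors.append(f"questions[{i}] is not an object")
            continue
        if not isinstance(q.get("prompt"), str) or not q["prompt"].strip():
            errors.append(f"questions[{i}].prompt must be a non-empty string")
        opts = q.get("options")
        if not (isinstance(opts, list) and len(opts) >= 2
                and all(isinstance(o, str) for o in opts)):
            errors.append(f"questions[{i}].options must be a list of 2+ strings")
            continue
        ans = q.get("answer_index")
        # bool is a subclass of int in Python, so exclude it explicitly.
        if not (isinstance(ans, int) and not isinstance(ans, bool) and 0 <= ans < len(opts)):
            errors.append(f"questions[{i}].answer_index out of range")
    return errors
```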


V. PHASE GATE PLAN: IMPLEMENTATION ROADMAP

// Phased rollout minimizes architectural debt and proves LLM capabilities early.

-

PHASE 1: RAG CORE FOUNDATION

TARGET: Implement DINS. Build the CGE endpoint for stateless, RAG-based quiz generation. GO/NO-GO: Achieve >95% JSON Schema compliance and verified grounding of generated questions in retrieved sources.
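The compliance half of this gate reduces to a rate over a batch of evaluation outputs. A minimal sketch, assuming any per-output validator can be plugged in (a bare JSON parse check is used as the default here); note that ">95%" is a strict inequality, so exactly 95% is NO-GO. The grounding criterion would need its own threshold, which the plan does not fix.

```python
import json
from typing import Callable, Iterable


def parses_as_json(raw: str) -> bool:
    # Weakest possible check; swap in a full schema validator in practice.
    try:
        json.loads(raw)
        return True
    except json.JSONDecodeError:
        return False


def compliance_rate(outputs: Iterable[str],
                    is_valid: Callable[[str], bool] = parses_as_json) -> float:
    outs = list(outputs)
    if not outs:
        raise ValueError("no evaluation outputs")
    return sum(1 for o in outs if is_valid(o)) / len(outs)


def phase1_go(rate: float, threshold: float = 0.95) -> bool:
    return rate > threshold  # strictly greater, matching ">95%"
```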

-

PHASE 2: STATE INTEGRATION (CPS/FIRESTORE)

TARGET: Develop CPS. The LLM generates a complete, structured syllabus (JSON), which CPS writes to Firestore. GO/NO-GO: Full decoupling of Knowledge (Vector DB) from State (Firestore).
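One way to make the Knowledge/State split testable is to have CPS depend only on two narrow interfaces. The sketch uses `typing.Protocol` with toy in-memory implementations; the interface names, the keyword "search", the document path, and the omission of the actual LLM call are all assumptions made for illustration.

```python
from typing import Dict, List, Protocol


class KnowledgeStore(Protocol):
    """Read-mostly course content (the vector DB in the plan)."""
    def search(self, query: str, k: int) -> List[str]: ...


class StateStore(Protocol):
    """Mutable per-learner state (Firestore in the plan)."""
    def get(self, path: str) -> Dict: ...
    def set(self, path: str, doc: Dict) -> None: ...


class KeywordKnowledge:
    def __init__(self, chunks: List[str]) -> None:
        self.chunks = chunks

    def search(self, query: str, k: int) -> List[str]:
        # Toy relevance (shared-word count); a real store would run a vector query.
        words = set(query.lower().split())
        ranked = sorted(self.chunks, key=lambda c: len(words & set(c.lower().split())),
                        reverse=True)
        return ranked[:k]


class InMemoryState:
    def __init__(self) -> None:
        self.docs: Dict[str, Dict] = {}

    def get(self, path: str) -> Dict:
        return dict(self.docs.get(path, {}))

    def set(self, path: str, doc: Dict) -> None:
        self.docs[path] = dict(doc)


def cps_write_syllabus(knowledge: KnowledgeStore, state: StateStore,
                       uid: str, goal: str) -> Dict:
    # CPS reads from the knowledge store and writes only to the state store;
    # the LLM step that turns sources into modules is omitted here.
    sources = knowledge.search(goal, k=2)
    syllabus = {"goal": goal,
                "modules": [{"id": f"m{i + 1}", "source": s} for i, s in enumerate(sources)]}
    state.set(f"users/{uid}/syllabus/current", syllabus)
    return syllabus
```

Because neither store knows about the other, either one can be replaced (or faked in tests) without touching CPS.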

-

PHASE 3: ADAPTIVE CLOSED-LOOP (ALS)

TARGET: Implement ALS triggers on submission. Deploy LLM-based short-answer grading. GO/NO-GO: Demonstrate dynamic curriculum modification in a multi-user environment.
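In a multi-user setting, two submissions can update the same learner document at once. In production this would map onto Firestore transactions; the in-memory store below uses a version counter with compare-and-set (optimistic concurrency) as a stand-in. The class, function, and field names are hypothetical, and `score` is assumed to come from the LLM grader already bounded to [0, 1].

```python
import threading
from typing import Dict, Tuple


class VersionedStore:
    """In-memory stand-in: a write succeeds only if the writer saw the latest version."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._docs: Dict[str, Tuple[int, dict]] = {}

    def read(self, path: str) -> Tuple[int, dict]:
        with self._lock:
            version, doc = self._docs.get(path, (0, {}))
            return version, dict(doc)

    def compare_and_set(self, path: str, expected: int, doc: dict) -> bool:
        with self._lock:
            current, _ = self._docs.get(path, (0, {}))
            if current != expected:
                return False  # someone else wrote first; caller must re-read
            self._docs[path] = (current + 1, dict(doc))
            return True


def on_submission(store: VersionedStore, uid: str, topic: str, score: float,
                  retries: int = 10) -> dict:
    """ALS trigger: fold one graded submission into the learner's document,
    re-reading and retrying if a concurrent writer got there first."""
    path = f"users/{uid}"
    for _ in range(retries):
        version, doc = store.read(path)
        attempts = dict(doc.get("attempts", {}))
        last_scores = dict(doc.get("last_scores", {}))
        attempts[topic] = attempts.get(topic, 0) + 1
        last_scores[topic] = score
        doc["attempts"], doc["last_scores"] = attempts, last_scores
        if store.compare_and_set(path, version, doc):
            return doc
    raise RuntimeError(f"too much contention on {path}")
```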

THANK YOU

"Ideas are worthless. Execution is priceless.

So I am not afraid of sharing my ideas."